As it happens I try to render many voxels in my latest project Monolith (Project page is still pending, but you can look on github for it).

What is a Voxel? A voxel is a small volumetric element - it is a pixel in 3D. The nice things with them is that the logical arrangement in a 3D textures allows simple algorithms, e.g. for destructions. That is why I want to use them.

What is many? Well Monolith is a space strategy game based on voxels. Therefore spaceships and planets and solar systems and ... are made of voxels. To store the earth in 1 m³ large volume elements we would need 10^21 of them. Even if we store only one byte for each this requires 962081 PB RAM or disc space. So, many is as much as possible.

Which approaches do exist?

First of all I decided that I want to render voxels directly in the form of boxes. There are people how don't like that and yes there are algorithms as marching cubes to create continuous surfaces but I considered real cubes as the way to go. They allow much more dynamical updates because not every change causes a recomputation of complex meshes. Following Ideas can suffice for this task:

- Ray marching (see Gigavoxels [1])

It is possible to use octrees - which are the natural representation of voxel hierarchies - to efficiently ray-cast a 3D texture. But its not exactly what I want to do. All models in the game have there own dynamic transformation, hence there would be many relatively small 3D textures and I have to test which objects are hit by a ray before using a sparse octree traversal. This approach would probably not be realtime. - Static vertex buffers with many cubes

One could create quads for each surface side of a cube. Drawing static meshes is just the fastest thing a GPU can do. Updating the information is somewhat tricky because we need to know a neighborhood. I assume this to be fastest anyway but it requires plenty of space. It is possible, but not probable, that I benchmark that later. - Instancing

The advantage is that only a few data per cube is required on GPU. Since there is no need to store real vertices (only one real cube mesh and many instance information) this would be efficient. To update a local change only the instance information must be overwritten or masked and bus-traffic should be low.

Indeed I had a reference implementation for many instanced cubes but I wanted to test if this can be outperformed. - Geometry shader

This is what I did and I will explain it in detail.

My Geometry Shader Advanced Instancing

It is common practice to create billboards in form of quads inside a geometry shader. Why not whole cubes? Depending on the vertex normals (smoothed or per face) this requires either 16 or 24 vertices. Both are possible but effectively to large to be efficient. On the other hand we can decide if a cube is visible before any triangle is created. It happens that backface culling for a cube will always remove one of two opposite faces. Therefore my shader emits at most 12 vertices for 3 quads.

Spanning the cube

For a single voxel based model all cubes have the same size and rotation. One could compute some offset vectors to a voxel center in the shader, but since these vectors are equal for all instances they can also be precomputed. To avoid two vector-matrix transformations the vectors must be added in object space (before transformation) or in projection space (after transformation of the center). I did the second one.

Culling

In projection space frustum culling is easy. The homogeneous coordinate must be inside the unit volume:  . Multiplying this with

. Multiplying this with  gives the simple equations:

gives the simple equations:

|

1 2 3 4 5 6 7 8 |

vec4 vPos = vec4( x, y, z, 1 ) * c_mWorldViewProjection; // Discard voxel outside the viewing volume float w = vPos.w + max(max(length(c_vCorner000), length(c_vCorner001)), max(length(c_vCorner010), length(c_vCorner011))); if( abs(vPos.z) > w || abs(vPos.x) > w || abs(vPos.y) > w ) return; |

Then I added backface culling. This got a bit more complicated. I did not find a way to do this in projection space directly. A direction in projection space can be transformed to view space with a single multiplication (assuming you have the same projection matrix as I have) with (1/mProjection[0][0], 1/mProjection[1][1], 1/mProjection[2][2]) . As last trick I added a masking for rejecting faces which are "inside". If there are two neighbored voxels the two sides which are shared in between are never visible.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 |

// Decide for front or back face if( dot((c_vCorner000.xyz+c_vCorner110.xyz)*c_vInverseProjection.xyz, vViewDirection) > 0 ) { // Reject invisible faces inside the model if( (vs_out_VoxelCode[0] & uint(0x10)) != uint(0) ) { vec3 vNormal = normalize((vec4(0,0,-1,0) * c_mWorldView).xyz); gs_color = Lightning(vNormal, vViewDirection, shininess, specular, color, emissive); gl_Position = c_vCorner000 + vPos; EmitVertex(); gl_Position = c_vCorner100 + vPos; EmitVertex(); gl_Position = c_vCorner010 + vPos; EmitVertex(); gl_Position = c_vCorner110 + vPos; EmitVertex(); EndPrimitive(); } } else { // Reject invisible faces inside the model if( (vs_out_VoxelCode[0] & uint(0x20)) != uint(0) ) { vec3 vNormal = normalize((vec4(0,0,1,0) * c_mWorldView).xyz); gs_color = Lightning(vNormal, vViewDirection, shininess, specular, color, emissive); gl_Position = c_vCorner011 + vPos; EmitVertex(); gl_Position = c_vCorner111 + vPos; EmitVertex(); gl_Position = c_vCorner001 + vPos; EmitVertex(); gl_Position = c_vCorner101 + vPos; EmitVertex(); EndPrimitive(); } } |

Remarks: Of course you need to emit normals, texture coordinates or other stuff you need to colorize your voxel. I do lightning on voxel basis to reduce the export bottle neck. Further the code above is only for two of the six sides. It must be replicated for the other two directions.

Input

Geometry shaders aren't that fast, especially if the amount of data increases. I encoded the invisible masking and the position inside a single 32 bit integer value. There are 6 bits for the mask of each side and 3*8 bits for the position, the remaining 2 bits are not used currently. This allows to address 256^3 positions which leads to the need of a chunked approach for larger models.

Monolith is using a 32^3 chunk size only because, despite the technical necessity, this allows an efficient memory managment as well as easy LOD and large scale culling algorithms. More information might come in a future post.

Result

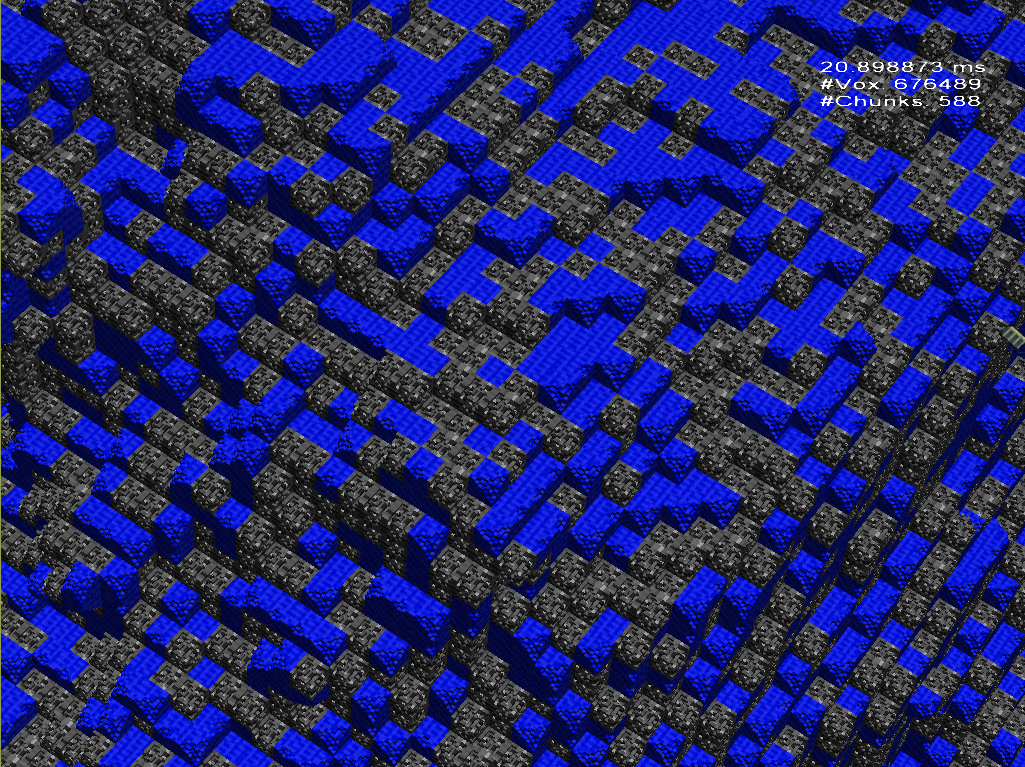

The image was made with an Geforce 640M LE. It draws 32368 voxels per millisecond. Let me know if you achieve higher rates with another approach!

I didn't test the code yet but I have got a NVidia 1050Ti with a 4ghz i7. Are you joking with your old school graphics card?

The benchmark is real.

In the shown scenario 676k vertices are drawn which is a lot but not the limit. Rendering a million poly model is possible with mobile graphics. According to this wiki table even the 635M has 506M triangles per second throughput.

Secondly, only the visible ones create visible triangles. There is nearly no overdraw and not much culling after the geometry shader. Therefore, rasterizer and ROPs have not much to do.

Did you see the second article of mine? Using hybrid approaches with ray-tracing are even faster.