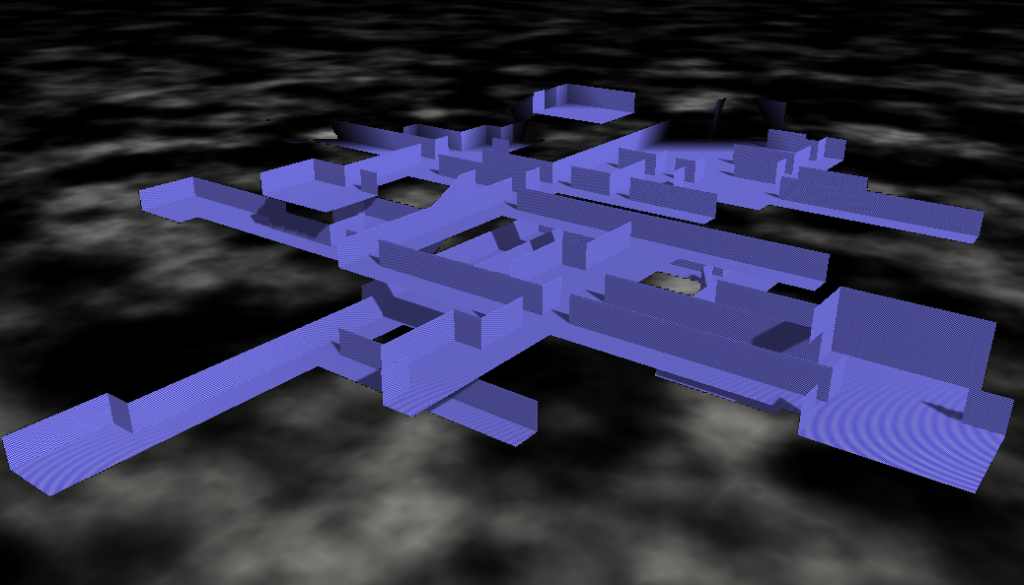

This weekend I wrote a simple labyrinth generator for Pathfinder. It is surprisingly easy to get interesting systems. Much easier than trees!

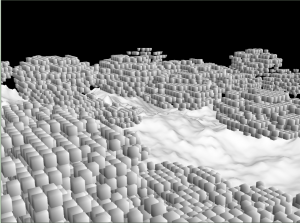

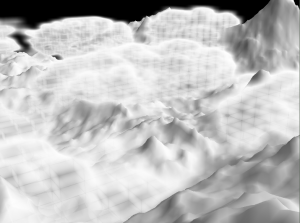

The first and most important decision I made was to use a voxel grid. Creating a mesh directly would cause problems with self-intersections. So my idea was to create a volume texture and just set voxels to solid, corridor, stairs, and so on. After that one could use a standard extraction of implicit surfaces (Marching Cubes or Dual Contouring). I decided to use a specialised generator instead to keep the number of triangles low.

Without the special case of stairs the idea is very simple: for each non-solid voxel, sample its 6 neighbours. If a neighbour is solid there must be a wall on that side, so create a quad.
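The neighbour test can be sketched in a few lines of Python. This is only an illustration, not the code used in Pathfinder: the grid is assumed to be a dictionary from integer coordinates to voxel types where every missing entry counts as solid, and the names `extract_wall_quads` and `quad_corners` are made up for this example. Stairs and triangle winding order are ignored.

```python
SOLID, CORRIDOR = 0, 1  # illustrative voxel types

# Offsets to the six axis-aligned neighbours of a voxel.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def extract_wall_quads(grid):
    """Return (voxel, direction) pairs, one per wall quad.

    grid maps (x, y, z) to a voxel type; missing voxels are solid.
    """
    quads = []
    for (x, y, z), kind in grid.items():
        if kind == SOLID:
            continue
        for dx, dy, dz in NEIGHBOURS:
            if grid.get((x + dx, y + dy, z + dz), SOLID) == SOLID:
                quads.append(((x, y, z), (dx, dy, dz)))
    return quads

def quad_corners(voxel, direction):
    """Four corners of the unit-voxel face that points in `direction`."""
    axis = next(i for i in range(3) if direction[i] != 0)
    u, v = [i for i in range(3) if i != axis]
    base = list(voxel)
    if direction[axis] > 0:
        base[axis] += 1  # the face lies on the far side of the voxel
    corners = []
    for du, dv in ((0, 0), (1, 0), (1, 1), (0, 1)):
        corner = list(base)
        corner[u] += du
        corner[v] += dv
        corners.append(tuple(corner))
    return corners
```

A single open voxel produces six quads, while two adjacent open voxels produce ten because the shared face is skipped. That is where the triangle savings over a generic iso-surface extraction come from.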

Component Generator

The generator must now fill the voxel grid with meaningful values. Since I would like to have corridors and rooms rather than natural shapes, it is sufficient to use very large voxels, where a single voxel is already a corridor/room.

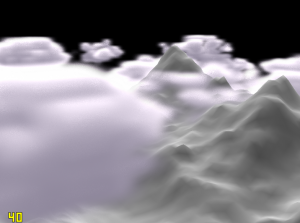

I divided the generator itself into two parts: the single components, each describing a certain structure, and a recursive method to create the full labyrinth. Each component generator returns a position where further structures can be attached. My generator uses 3 basic elements:

- Corridors: Just set a line of voxels to "Corridor". A corridor has a random length in [2,10].

- Chambers: Create a larger empty volume centered at the entrance.

- Stairs: Create two diagonal voxels of type stair. The problem here is the triangulation.

There should be a slope between two floors, but so far only axis-aligned triangles are generated. I introduced a shearing for the stair voxels. So far so good, but the resulting sheared corridor will cross solid voxels (see image). That in itself is no problem, but if another structure intersects there, this will cause an ugly triangle inside the room. To overcome this, a stair structure locks its surroundings as solid.

A much easier approach would be to create vertical corridors and insert stairs as extra objects. That I would like to have these slopes is a design decision.
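The three components described above could look roughly like the following Python sketch. Several details are assumptions for illustration only: the grid is a dictionary in which missing voxels are solid, `y` is treated as the up axis, the attachment point is taken to be the first voxel past the structure, locking is modelled as a separate `locked` set, and the function names are invented. Which voxels a stair locks exactly is also a guess (here: all still-solid direct neighbours except its entry and exit).

```python
import random

SOLID, CORRIDOR, CHAMBER, STAIRS = range(4)  # illustrative voxel types
SIDES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def carve(grid, locked, pos, kind):
    """Set one voxel unless a stair has locked it as solid."""
    if pos in locked:
        return False
    grid[pos] = kind
    return True

def make_corridor(grid, locked, start, direction, rng):
    """Carve a straight line of 2..10 voxels; return the voxel past its end."""
    length = rng.randint(2, 10)
    x, y, z = start
    dx, dy, dz = direction
    for i in range(length):
        carve(grid, locked, (x + i * dx, y + i * dy, z + i * dz), CORRIDOR)
    return (x + length * dx, y + length * dy, z + length * dz)

def make_chamber(grid, locked, entrance, half_size=1):
    """Carve a flat square room centred at the entrance."""
    x, y, z = entrance
    for i in range(-half_size, half_size + 1):
        for k in range(-half_size, half_size + 1):
            carve(grid, locked, (x + i, y, z + k), CHAMBER)
    return (x + half_size + 1, y, z)

def make_stairs(grid, locked, start, direction):
    """Carve two diagonal stair voxels and lock their solid surroundings."""
    x, y, z = start
    dx, _, dz = direction  # horizontal walking direction
    lower = (x, y, z)
    upper = (x + dx, y + 1, z + dz)
    entry = (x - dx, y, z - dz)
    exit_pos = (x + 2 * dx, y + 1, z + 2 * dz)
    for pos in (lower, upper):
        carve(grid, locked, pos, STAIRS)
    for px, py, pz in (lower, upper):
        for ox, oy, oz in SIDES:
            neighbour = (px + ox, py + oy, pz + oz)
            # Only still-solid voxels are locked; entry and exit stay open.
            if neighbour not in grid and neighbour not in (entry, exit_pos):
                locked.add(neighbour)
    return exit_pos
```

Because every component goes through `carve`, a later corridor that runs into a locked voxel simply leaves it solid, which is one way to avoid the stray triangle inside the sheared stair corridor.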

The recursive part does the following: choose one of the structures probabilistically. If it is "corridors", create 1-3 corridors; otherwise create exactly one of the chosen structure. Continue the recursion at each returned attachment point. To stop the recursion, a counter is decreased in each step. It does not directly count the recursion depth. Instead it is decreased by the number of created sub-structures. So if there are 3 corridors the counter decreases faster and the recursion stops earlier. This should stabilize the "size" of the result. For a really stable size control it would be necessary to divide the remaining count by the number of sub-calls. That way one could create exactly n structures, where n is given in advance.
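Both stopping rules can be sketched as follows. This is a simplified illustration: the probabilities in `WEIGHTS`, the `build` callback and the `dummy_build` stand-in are assumptions made up for this example, and collisions between structures are not handled. `generate` follows the counter scheme described above, `generate_exact` the variant that splits the remaining count among the sub-calls.

```python
import random

KINDS = ["corridor", "chamber", "stairs"]
WEIGHTS = [0.6, 0.2, 0.2]  # assumed probabilities, not from the original

def pick(rng):
    """Choose a structure type and how many instances of it to create."""
    kind = rng.choices(KINDS, weights=WEIGHTS)[0]
    count = rng.randint(1, 3) if kind == "corridor" else 1
    return kind, count

def generate(attach, budget, rng, build, created):
    """Counter scheme: the budget shrinks by the number of sub-structures."""
    if budget <= 0:
        return
    kind, count = pick(rng)
    budget -= count
    for _ in range(count):
        created.append(kind)
        next_attach = build(kind, attach, rng)
        generate(next_attach, budget, rng, build, created)

def generate_exact(attach, n, rng, build, created):
    """Split the remaining count among the sub-calls: creates exactly n."""
    if n <= 0:
        return
    kind, count = pick(rng)
    count = min(count, n)  # never create more than allowed
    share, extra = divmod(n - count, count)
    for i in range(count):
        created.append(kind)
        next_attach = build(kind, attach, rng)
        child_budget = share + (1 if i < extra else 0)
        generate_exact(next_attach, child_budget, rng, build, created)

def dummy_build(kind, attach, rng):
    """Hypothetical stand-in for a component generator; carves nothing."""
    return attach

created = []
generate_exact((0, 0, 0), 25, random.Random(42), dummy_build, created)
print(len(created))  # 25, independent of the seed
```

In the first variant the number of structures still depends on the random choices, because every branch receives the same reduced budget. In the second variant each call creates `count` structures and hands out the remaining `n - count` to its children, so the total is exactly `n`.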

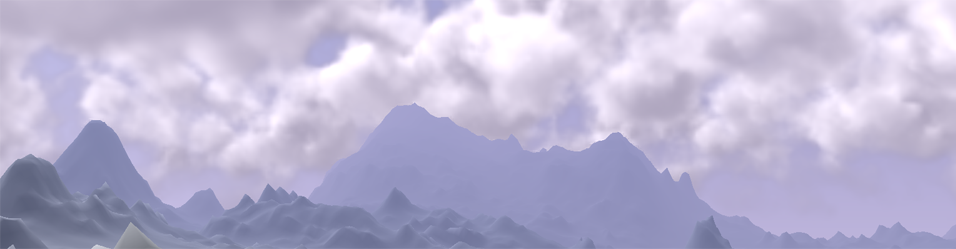

It should be said that the generator can create labyrinths of very different complexity. In the described approach, floors are generated on every voxel grid level. There are many points where the structures intersect vertically. It is possible to jump down into the floor below, and there are even loops in 3D where the common rule "always touch the wall with the same hand" fails to lead out of the labyrinth. To make things easier I create stairs of length two, so one voxel level is always skipped. Due to chambers it is still possible that there are vertical intersections, but they are rare.

In conclusion, it was a very good idea to use voxels in the first place. In the future I might use the component-based generator approach for other things. One could even think of something hierarchical. For each substructure one can just ignore everything else and concentrate on a meaningful result.